TAPE: Task-Agnostic Prior Embedding for Image Restoration

Lin Liu, Lingxi Xie, Xiaopeng Zhang, Shanxin Yuan, Xiangyu Chen, Wengang Zhou, Houqiang Li, Qi Tian

Abstract

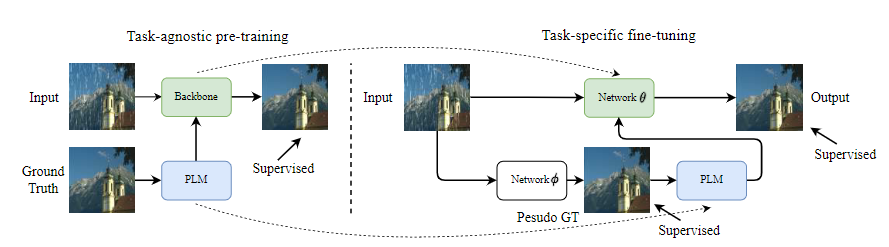

Learning a generalized prior for natural image restoration is an important yet challenging task. Early methods mostly relied on handcrafted priors such as normalized sparsity, ℓ0 gradients, and dark channel priors. More recently, deep neural networks have been used to learn various image priors, but these priors are not guaranteed to generalize. In this paper, we propose a novel approach that embeds a task-agnostic prior into a transformer. Our approach, named Task-Agnostic Prior Embedding (TAPE), consists of two stages, task-agnostic pre-training and task-specific fine-tuning: the former embeds prior knowledge about natural images into the transformer, and the latter extracts that knowledge to assist downstream image restoration. Experiments on various types of degradation validate the effectiveness of TAPE. More importantly, TAPE is able to disentangle generalized image priors from degraded images, and these priors transfer favorably to unknown downstream tasks.

Framework Overview